Note that these tips may count as micro-optimizations on a desktop PC, but the difference is noticeable on mobile.

Move your game computation into love.update, and keep love.draw solely for rendering.

Okay, let’s see why. First, math computations in LuaJIT are compiled, which means they’re fast. Second, calls to the love.graphics API are not compiled, and they keep the code around them from being compiled too. So instead of this:

function love.draw()
    var1 = var1 + 20 -- this computation is done in the LuaJIT interpreter
    -- because the love.graphics.draw call below can't be compiled
    love.graphics.draw(image, var1 * 0.02, 0)
end

Do it like this.

function love.update()
    -- this computation is done in machine code because it's compiled.
    var1 = var1 + 20 * 0.02 -- LuaJIT will optimize 20*0.02 to 0.4
end

function love.draw()
    love.graphics.draw(image, var1, 0)
end

Note: this is less relevant on mobile (iOS and Android), since the JIT compiler is disabled there by default.
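The same split scales up to many objects. Below is a minimal sketch, assuming a hypothetical `entities` list whose entries carry a position and a velocity: all the arithmetic lives in one function meant to be called from love.update(dt), and the draw function only reads the precomputed fields. The helper names are made up for illustration, and love.graphics is passed in as a parameter so the file also runs in plain Lua.

```lua
-- Hypothetical game state: each entity has a position and a velocity
-- in pixels per second.
local entities = {
    { x = 0,   y = 0,  vx = 40, vy = 0 },
    { x = 100, y = 50, vx = 0,  vy = -20 },
}

-- All the math lives here. Call it from love.update(dt).
-- With the JIT enabled, LuaJIT can compile this plain arithmetic loop.
local function updateEntities(list, dt)
    for i = 1, #list do
        local e = list[i]
        e.x = e.x + e.vx * dt
        e.y = e.y + e.vy * dt
    end
end

-- Rendering only: read the precomputed positions and draw them.
-- `graphics` is love.graphics inside LOVE.
local function drawEntities(list, graphics, image)
    for i = 1, #list do
        local e = list[i]
        graphics.draw(image, e.x, e.y)
    end
end

-- Wiring inside LOVE (commented out so the file also runs in plain Lua;
-- assumes `image` was loaded in love.load):
-- function love.update(dt) updateEntities(entities, dt) end
-- function love.draw() drawEntities(entities, love.graphics, image) end
```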

Use SpriteBatch, especially if it’s static.

Admittedly, calls to SpriteBatch methods will never be compiled. Also, as of LOVE 11.0, there’s a feature called autobatching, which automatically batches your draws, much like a SpriteBatch in “dynamic” or “stream” mode.

Now, why use SpriteBatch for static batches? Because it’s better to call love.graphics.draw once to draw many things than to call love.graphics.draw once for each thing. The former saves CPU time by avoiding the repeated Lua-to-C API call overhead, allowing your game to run faster.
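Here is a minimal sketch of the static case, assuming a tile map drawn from a single image. `buildTileBatch` is a hypothetical helper, and love.graphics is passed in as `graphics` so the helper can be exercised outside LOVE; love.graphics.newSpriteBatch and SpriteBatch:add are the LOVE calls it relies on.

```lua
-- Fill a "static" SpriteBatch with a cols x rows grid of tiles.
-- "static" tells LOVE the contents won't change after they're added.
local function buildTileBatch(graphics, image, cols, rows, tileSize)
    local batch = graphics.newSpriteBatch(image, cols * rows, "static")
    for row = 0, rows - 1 do
        for col = 0, cols - 1 do
            batch:add(col * tileSize, row * tileSize)
        end
    end
    return batch
end

-- Inside LOVE (commented out so the file also runs in plain Lua;
-- "tiles.png" is a placeholder asset name):
-- local tiles
-- function love.load()
--     tiles = buildTileBatch(love.graphics, love.graphics.newImage("tiles.png"), 25, 20, 32)
-- end
-- function love.draw()
--     love.graphics.draw(tiles) -- one draw call instead of 500
-- end
```

The batch is built once in love.load, so the per-frame cost is a single love.graphics.draw call no matter how many tiles it holds.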

Only use pairs when you don’t have a choice.

Even with LuaJIT 2.1’s trace stitching feature (which keeps uncompiled functions like love.graphics.draw from breaking JIT compilation), pairs still breaks JIT compilation because it uses uncompiled bytecode. You can check which things are compiled and which are not here. If you really need to index by something other than numbers, store the keys in a numbered array, iterate over that, then use each key to index the actual table. That way you can use ipairs or a simple for loop to iterate (both are compiled). Something like this:

local myTableKey = {"key1", "key2", "key3"}
local myTableValue = {key1 = "Hello", key2 = "World", key3 = io.write}

for i, v in ipairs(myTableKey) do
    local value = myTableValue[v]
    -- do something with `value`.
    -- It can be either "Hello", "World", or the `io.write` function.
end

Oh, also: pairs doesn’t guarantee that keys are visited in the order they were defined. When you run pairs over myTableValue above, key3 might come first, then key1, then key2.
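The key-array pattern also works with a plain numeric for loop, and because the loop walks the key array, the visiting order always follows myTableKey, unlike pairs. A small sketch reusing the same two tables (the `parts` and `greeting` variables are just for illustration):

```lua
local myTableKey = { "key1", "key2", "key3" }
local myTableValue = { key1 = "Hello", key2 = "World", key3 = io.write }

-- Walk the key array with a numeric for loop instead of pairs.
-- Order always follows myTableKey: key1, key2, key3.
local parts = {}
for i = 1, #myTableKey do
    local value = myTableValue[myTableKey[i]]
    if type(value) == "string" then
        parts[#parts + 1] = value -- skips the io.write function stored under key3
    end
end

local greeting = table.concat(parts, " ")
```

Since the order is fixed, `greeting` is always "Hello World", which you could not rely on when iterating myTableValue with pairs.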

Use io.write for simple debugging, not print.
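The difference is easiest to see side by side. In this plain Lua sketch (variable names made up), print separates values with tabs, appends a newline, and runs tostring on everything, while with io.write you add the separators and newline yourself and convert anything that isn’t a string or number.

```lua
local x, y, visible = 10, 20.5, true

-- print inserts tabs between values and a newline at the end,
-- and converts every value (booleans, tables, nil) with tostring.
print("pos", x, y, visible)

-- io.write only accepts strings and numbers and adds nothing by itself,
-- so write the separators, the conversion, and the newline explicitly.
io.write("pos ", x, " ", y, " ", tostring(visible), "\n")
```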

print is not compiled, but io.write is. The downside of io.write is that you lose print’s automatic value separation and object-to-string conversion. Honestly, this is mostly a matter of preference: if you need the full power of print, use print; for very simple variable printing, use io.write.

Never use FFI for computation optimization purposes.

This is especially true if you’re targeting mobile devices. Check out this chat I took from the LOVE Discord server:

A: Will vector-light be faster than brinevector?

B: Depends. Brinevector is faster, but only on desktop. It’s terribly slow on mobile. Vector-light (hump?) is faster, but the code is messy since that’s only for vector operations.

A: If I made an FFI version of vector-light that was just pure functions, it would be faster, right?

That’s where the problem begins. Once you step into FFI, your phone will start to cry when running your code.

“Wait, benchmarks show FFI is faster than plain Lua tables.”

That kind of argument doesn’t hold once the JIT compiler is disabled. In my own benchmarks, FFI was ~20x slower than plain Lua in most cases with the JIT compiler disabled. So why does this matter for mobile devices? Look at the first tip above: mobile devices have the JIT compiler disabled by default, which means slow FFI.

Well, you shouldn’t use FFI on mobile devices at all anyway. Starting with Android 7, any call to ffi.load will just fail, because Android 7 changed dlopen and dlsym to restrict them so heavily that ffi.load stops working. And on iOS, I don’t see the point of using FFI, since everything must be statically compiled.
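If you need vector math on mobile, plain Lua numbers are the safer bet. Below is a minimal sketch in the style of vector-light, where a vector is passed around as two separate numbers so no table or FFI cdata is allocated per operation. The `vec` table and its function names are illustrative, not vector-light’s exact API.

```lua
-- Plain-Lua 2D vector helpers: vectors are passed as separate x, y numbers.
local vec = {}

function vec.add(x1, y1, x2, y2)
    return x1 + x2, y1 + y2
end

function vec.scale(s, x, y)
    return s * x, s * y
end

function vec.len(x, y)
    return math.sqrt(x * x + y * y)
end

function vec.normalize(x, y)
    local l = vec.len(x, y)
    if l == 0 then
        return 0, 0 -- avoid dividing by zero for the zero vector
    end
    return x / l, y / l
end
```

Because a call in the last argument position expands to all of its return values, something like `vec.add(px, py, vec.scale(speed * dt, dirX, dirY))` (hypothetical variable names) passes four numbers to vec.add.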

Switch the JIT compiler on or off in conf.lua, before love.conf is called
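In practice, this means putting the switch at the top level of conf.lua, outside love.conf. A minimal sketch, assuming you want the JIT compiler off on every platform (call jit.on() instead to force it on); `jit` is LuaJIT’s built-in module, the `if jit` guard simply skips the switch on a non-LuaJIT build, and the t.identity value is a placeholder:

```lua
-- conf.lua
-- Top-level code here runs when LOVE loads conf.lua,
-- before love.conf is called and before main.lua is loaded.
if jit then
    jit.off() -- or jit.on(); pick one and do it here, not in main.lua
end

function love.conf(t)
    t.identity = "mygame" -- placeholder save-directory name
end
```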

You may ask yourself: why? The answer is that LOVE uses some FFI call optimizations when it detects that it’s running with the JIT compiler enabled, mainly in the love.sound and love.image modules, and this detection happens before main.lua is loaded. Some people get this wrong by turning the JIT compiler on or off in main.lua, which can lead to slower performance.

Conclusion

These kinds of optimizations mostly help on the CPU side. For the GPU, you need to reduce draw calls, reduce canvas usage (or avoid canvases entirely), and use compressed textures. If your game is still slow but CPU usage stays low, you’re likely fill-rate-limited, in which case none of the optimizations here will really help.
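To check whether cutting draw calls and canvas switches is paying off, LOVE can report rendering statistics through love.graphics.getStats. A small sketch; `statsLine` is a hypothetical helper, and love.graphics is passed in so it can be tested outside LOVE:

```lua
-- Format a few of LOVE's rendering stats into one line.
-- texturememory is reported in bytes; convert to megabytes for display.
local function statsLine(graphics)
    local s = graphics.getStats()
    return string.format("draw calls: %d  canvas switches: %d  texture memory: %.1f MB",
        s.drawcalls, s.canvasswitches, s.texturememory / (1024 * 1024))
end

-- Inside LOVE (commented out so the file also runs in plain Lua),
-- print it near the end of love.draw, after everything else is drawn:
-- love.graphics.print(statsLine(love.graphics), 10, 10)
```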

Note that I may still be wrong, so if you have something to add, or if something in the tips above is invalid, just comment below to discuss it.

|

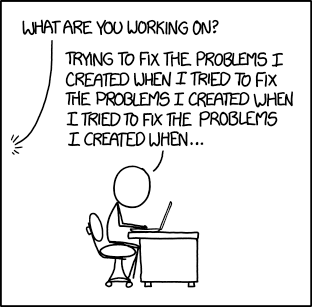

| 'What was the original problem you were trying to fix?' 'Well, I noticed one of the tools I was using had an inefficiency that was wasting my time.' |

Also, did I mention that the JIT compiler for ARM64 is unstable?